Today’s turbulent social and economic landscapes are adding nuance to communication like never before. Add the fact that so many people now work and learn remotely, and therefore rely more and more on virtual communication platforms such as text and e-mail, and it becomes very clear how important it is to understand the complexities of the English language and the intent behind it.

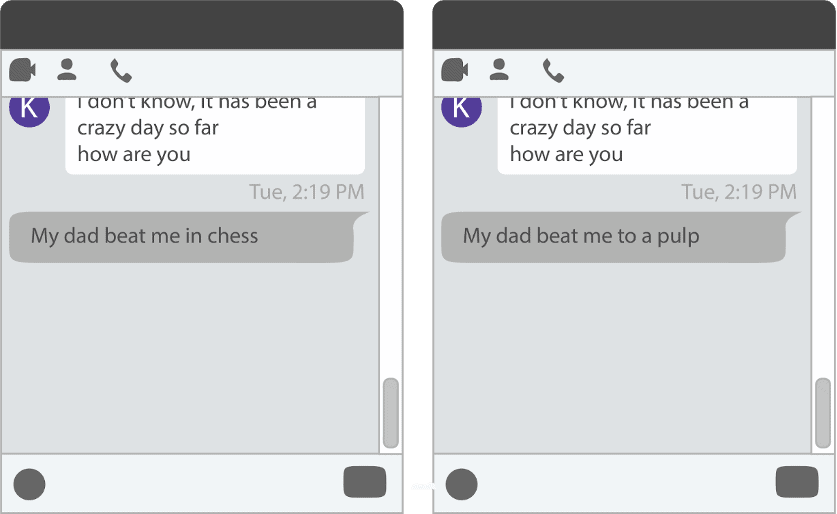

Imagine, for example, that your friend sent you something like this:

The Science of Linguistics

Linguistics can be used to make digital analysis of language more efficient. Detect’s platform uses logic-based processes that “understand” various pieces of language in a more systematic manner, paying attention to the following:

- Sentiment: whether the overall emotional gauge of the words in a sentence is positive or negative. Clues about sentiment can also come from emojis.

- Same word, different meaning: sentences may share the same grammatical structure yet have entirely different meanings. Consider the ‘beat’ example above: while one sentence is not life-threatening, the other presents a potentially dangerous situation. Another example is ‘shoot,’ which can refer to a camera or to a gun.

- Tense: tense tells us when the harm occurs. An author may be writing about an event that will occur in the future (e.g., a planned school shooting), a threatening circumstance that is occurring in real time (e.g., having a gun in a backpack), or a traumatic experience that happened in the past (e.g., sexual assault or abuse at home). Depending on the topic, tense is vital for understanding urgency.
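To make the sentiment and tense cues above concrete, here is a minimal lexicon-based sketch. It is not Detect’s method: the word lists, the cue-word heuristic for tense, and the function names are all hypothetical, and a real system would handle negation, sarcasm, and grammatical tense far more carefully (for example with a part-of-speech tagger).

```python
import re

# Hypothetical toy lexicons; a real system would use large, curated resources.
POSITIVE = {"love", "great", "happy", "fun", "😀"}
NEGATIVE = {"hate", "kill", "hurt", "sad", "angry", "😡", "😢"}

# Hypothetical cue words for tense; a part-of-speech tagger would be more reliable.
FUTURE_CUES = {"will", "gonna", "tomorrow", "planning"}
PAST_CUES = {"was", "were", "did", "yesterday", "happened"}


def tokenize(text: str) -> list[str]:
    # Lowercased words, plus any single non-word symbol (this keeps simple emojis).
    return re.findall(r"\w+|[^\w\s]", text.lower())


def sentiment(tokens: list[str]) -> str:
    # Net count of positive minus negative lexicon hits.
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def tense(tokens: list[str]) -> str:
    # Future cues take priority because they may signal an upcoming event.
    if any(t in FUTURE_CUES for t in tokens):
        return "future"
    if any(t in PAST_CUES for t in tokens):
        return "past"
    return "present"


msg = tokenize("I will hurt him tomorrow 😡")
print(sentiment(msg), tense(msg))  # negative future
```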

By applying linguistic concepts such as sentence structure, word meaning, tense and tone (or sentiment), we can help analytical models better understand whether a particular communication is threatening or concerning.

Hyperbole

Hy·per·bo·le

/hīˈpərbəlē/ noun – exaggerated statements or claims not meant to be taken literally.

Let’s dive a little deeper to see how much a single sentence structure can vary. By changing the subject of the following sentence, we greatly alter the potential harm it may communicate.

What if the subject is:

- a human? In this case, the post may be harmful, although not necessarily. Further context is required to determine the appropriate follow-up.

- a social-emotional hardship? For example, if the subject were depression or anorexia, the post may require further attention.

- one of a wide variety of nouns that indicate hyperbole? For example, if the subject is cat, exam, period, vacation, or dentist, the post reads as humorous, sarcastic, or exaggerated rather than potentially dangerous.
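The subject-slot idea can be sketched as a simple lookup, assuming a fixed sentence frame in which only the subject changes. The category lists extend the examples above with a few hypothetical entries, and the triage labels are illustrative only; anything unrecognized falls through to human review.

```python
# Hypothetical noun categories for the subject slot, seeded from the examples above.
HUMAN = {"he", "she", "they", "teacher", "brother", "classmate"}
HARDSHIP = {"depression", "anorexia", "anxiety"}
HYPERBOLE = {"cat", "exam", "period", "vacation", "dentist"}


def triage_subject(subject: str) -> str:
    """Suggest a follow-up level based only on which category the subject falls into."""
    s = subject.strip().lower()
    if s in HARDSHIP:
        return "needs attention"
    if s in HUMAN:
        return "needs more context"
    if s in HYPERBOLE:
        return "likely hyperbole"
    # Anything outside the known categories is left for a human reviewer.
    return "human review"


for noun in ["Depression", "classmate", "exam", "weather"]:
    print(noun, "->", triage_subject(noun))
```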

Word Meaning

“You shall know a word by the company it keeps.”

– John Rupert Firth, 1957

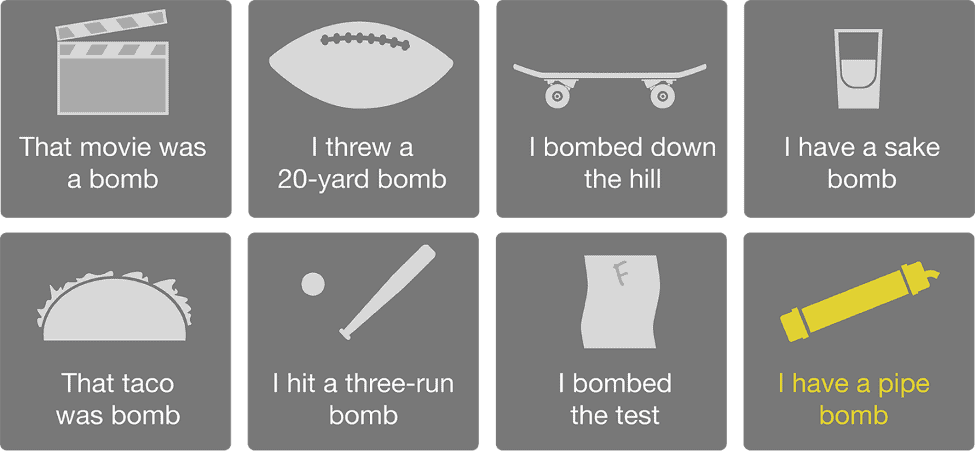

The word ‘shoot’ has 55 definitions, ranging from very dangerous to harmless. A simple keyword-based threat detection system would therefore return content about photography, sports, sending a text or e-mail, and more. A robust tool like Detect’s Language Engine needs logic far more sophisticated than looking for any single keyword.

Instead of relying on the simple presence of the word ‘shoot,’ we need to determine meaning by looking at adjacent words. Shooting a video or shooting hoops carry nonthreatening meanings, while shooting guns could be potentially harmful.
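A minimal sketch of context-window disambiguation for ‘shoot’, assuming hand-picked cue words for three senses and a window of three words on either side. The cue lists, the window size, and the `shoot_sense` function are hypothetical, not part of Detect’s Language Engine.

```python
import re

# Hypothetical cue words for three senses of "shoot"; real sense inventories are much larger.
SENSE_CUES = {
    "photography/video": {"video", "photo", "photos", "pictures", "camera", "film"},
    "sports": {"hoops", "ball", "baskets", "goal", "free", "throws"},
    "weapon": {"gun", "guns", "rifle", "pistol", "bullets"},
}


def shoot_sense(text: str, window: int = 3) -> str:
    """Guess the sense of 'shoot' from the words within `window` tokens of it."""
    tokens = re.findall(r"[a-z']+", text.lower())
    for i, tok in enumerate(tokens):
        if tok.startswith("shoot") or tok == "shot":
            context = set(tokens[max(0, i - window): i + window + 1])
            # Score each sense by how many of its cue words appear nearby.
            scores = {sense: len(context & cues) for sense, cues in SENSE_CUES.items()}
            best = max(scores, key=scores.get)
            if scores[best] > 0:
                return best
    return "unknown"


for text in ["Let's shoot a video after class", "We shot hoops all afternoon",
             "He said he wants to shoot guns", "Shoot me a text later"]:
    print(text, "->", shoot_sense(text))
```

A phrase like “Shoot me a text later” returns “unknown” here because none of the cue lists cover it; in a safety setting, unmatched contexts are better routed to review than assumed harmless.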

This determination must be done for every potentially threatening word. Take a look at the various meanings conveyed through the word ‘bomb.’

Our Solution

To prevent you from receiving content about tacos and basketball, effective platforms must use a two-pronged linguistic approach that includes:

(1) syntax-based searches rather than keyword-based searches, and

(2) context drawn from surrounding words. This process is automated using a network of word pairs, trios, or quadruplets that co-occur with statistical significance.
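The article does not name the statistic used to decide which word pairs co-occur significantly. One common choice for adjacent pairs is pointwise mutual information (PMI), which compares how often two words appear together with how often they would by chance: PMI(a, b) = log2(P(a, b) / (P(a) · P(b))). The sketch below applies PMI to bigrams only; the thresholds and the tiny corpus are assumptions chosen for illustration.

```python
import math
from collections import Counter


def significant_bigrams(sentences, min_count=2, min_pmi=1.0):
    """Return adjacent word pairs whose PMI (in bits) is at least `min_pmi`."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = sentence.lower().split()
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    n_uni, n_bi = sum(unigrams.values()), sum(bigrams.values())
    result = {}
    for (a, b), count in bigrams.items():
        if count < min_count:  # rare pairs give unreliable PMI estimates
            continue
        p_ab = count / n_bi
        p_a, p_b = unigrams[a] / n_uni, unigrams[b] / n_uni
        pmi = math.log2(p_ab / (p_a * p_b))
        if pmi >= min_pmi:
            result[(a, b)] = round(pmi, 2)
    return result


corpus = [
    "shoot hoops after school",
    "we shoot hoops daily",
    "the school is big",
    "we love the school",
]
print(significant_bigrams(corpus))
```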

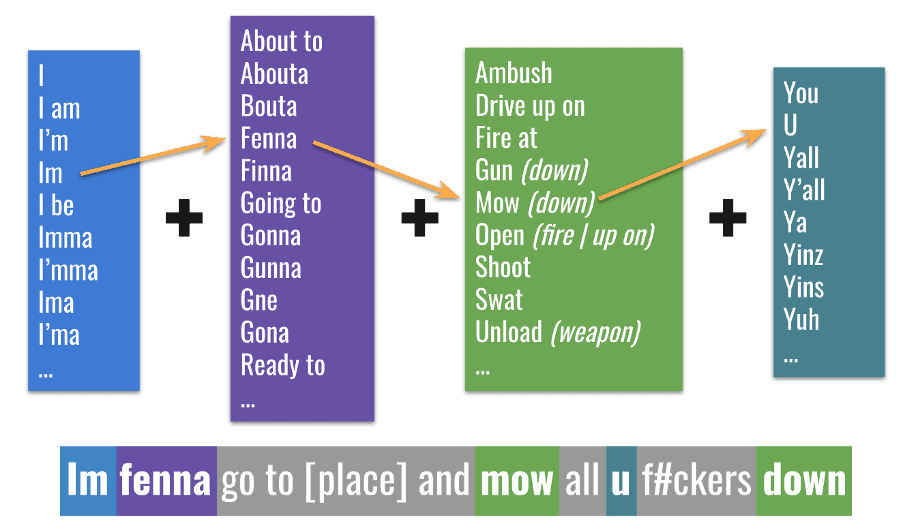

Instead of a keyword-based system, also called a ‘Bag of Words’ approach, our linguists build complete syntaxes. A match to these syntaxes requires a very specific linguistic structure that considers characteristics such as ordering, proximity, near terms and exclusions. This is how our platform can scan for a wide range of relevant language while simultaneously limiting erroneous matches.
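The difference between a bag-of-words check and a syntax match can be sketched as follows. The `Syntax` rule format (ordered slots of alternatives, a maximum gap between slots, and exclusion terms) is a hypothetical stand-in for the ordering, proximity, and exclusion features described above, not Detect’s actual syntax language.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Syntax:
    """Hypothetical rule: ordered slots of alternatives, a proximity limit, and exclusions."""
    slots: list[set[str]]
    max_gap: int = 2  # max number of other words allowed between consecutive slots
    exclusions: set[str] = field(default_factory=set)


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())


def bag_of_words_match(text: str, keywords: set[str]) -> bool:
    # Keyword approach: any single keyword anywhere is a hit.
    return bool(set(tokenize(text)) & keywords)


def _match_rest(tokens, slots, pos, max_gap):
    # Backtracking search: the next slot must appear after `pos`, within `max_gap` skipped words.
    if not slots:
        return True
    for i in range(pos + 1, min(len(tokens), pos + 2 + max_gap)):
        if tokens[i] in slots[0] and _match_rest(tokens, slots[1:], i, max_gap):
            return True
    return False


def syntax_match(text: str, syntax: Syntax) -> bool:
    tokens = tokenize(text)
    if syntax.exclusions & set(tokens):
        return False  # an exclusion term vetoes the match
    return any(
        tok in syntax.slots[0] and _match_rest(tokens, syntax.slots[1:], i, syntax.max_gap)
        for i, tok in enumerate(tokens)
    )


rule = Syntax(
    slots=[{"bring", "bringing", "brought"}, {"gun", "knife"}, {"school", "class"}],
    max_gap=3,
    exclusions={"nerf", "toy", "water"},
)

texts = [
    "I'm going to bring a gun to school tomorrow",
    "I'll bring my nerf gun to school",
    "The gun show was near school and I didn't bring anything",
]
for t in texts:
    print(bag_of_words_match(t, {"gun"}), syntax_match(t, rule))
```

All three sentences contain “gun,” so the keyword check flags each of them; the syntax rule matches only the first, because the second contains an exclusion term and the third has the words in the wrong order.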

Research, development and maintenance of these syntaxes are managed using the science of linguistics. Currently, our platform contains 1,800 syntaxes that generate more than 1.5 billion possible match combinations.
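To see why a relatively small set of syntaxes can yield a very large number of match combinations, consider a template in which each slot lists interchangeable alternatives: the number of phrases it produces is the product of the slot sizes. The template below is hypothetical and far simpler than a real syntax (it ignores agreement, optional slots, and proximity).

```python
import math
from itertools import product

# Hypothetical slot-based template; each slot lists interchangeable alternatives.
slots = [
    ["i", "we", "he", "she", "they"],                                    # 5 subjects
    ["will", "would", "might", "could"],                                 # 4 modals
    ["shoot", "stab", "hurt", "attack"],                                 # 4 verbs
    ["the school", "everyone", "my class", "the teachers", "the gym"],   # 5 targets
    ["tomorrow", "on monday", "after lunch", "next week"],               # 4 time phrases
]


def expand(slots):
    """Yield every phrase the template can produce, one alternative per slot."""
    for combo in product(*slots):
        yield " ".join(combo)


count = math.prod(len(s) for s in slots)  # 5 * 4 * 4 * 5 * 4
phrases = list(expand(slots))
print(count, len(phrases))  # 1600 1600
print(phrases[0])           # i will shoot the school tomorrow
```

Adding a sixth slot with ten alternatives would multiply the total tenfold, to 16,000; this multiplicative growth is why a modest number of rules can cover a very large space of phrasings.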

The following example shows an excerpt from one of these syntaxes, which alone generates tens of thousands of linguistic combinations.

Threat Assessment

Imagine you are responsible for manually identifying and assessing harmful or concerning content posted online or sent through an official school account. That task would be far too labor-intensive, time-consuming and unsustainable. Faculty members must be able to identify, assess and then react to potential threats as thoroughly and as quickly as possible to mitigate risk and prevent harm.

Linguistic and machine-learning models must be innovative, robust and continually updated to substantially reduce the number of posts your threat assessment team sifts through. Our models reduce the number of posts that need attention by a factor of 3,000, saving valuable time.

We still need the human touch. While linguistics allows for efficiency, you know your students best, and manual review and triage of digital communications is vital. Only you will know whether a student who says, “I am going to bomb this election” fears losing their run for Student Council President or means something much more dangerous.

Language is incredibly complex, and it changes constantly. Today’s social, emotional and related challenges demand proactive ways to analyze and identify signals of potential harm within the digital communication methods we use most often. Detect’s Data Science team can help you recognize and address the content that matters most to your students’ safety and overall wellbeing. This, in turn, helps you maintain a safe and secure learning environment in which everyone can reach their full potential and thrive.

Key takeaways

- The complexity of language makes understanding meaning — especially in digital conversations — very difficult.

- Two posts or chats can be identical in structure yet communicate two vastly different messages.

- Combining the science of linguistics and machine learning is highly effective in identifying potentially harmful language.

- In the end, it is human intelligence that knows whether a signal is actionable or benign.

About the Authors

Jess Blier

Analytical Linguist

Jess Blier, Analytical Linguist for Navigate360, is responsible for the development and optimization of our technology’s syntactic models. Jess specializes in analyzing threatening texts and emergency communications, with a Master’s degree in Forensic Linguistics and experience working with distinguished professionals in capital exoneration cases.

Polly Mangan

Director of Data Science

Polly Mangan, Director of Data Science for Navigate360, is responsible for leading the Data Science and Analytical Linguistics teams. Her focus is ensuring our technology is innovative, scalable, based in science, and built in a way that is ethical and respects privacy. Polly is a technical professional with a Master of Science in Applied Mathematics and 10 years of experience in the field of Data Science.

Kendall Fortney

Product Manager

Kendall Fortney is a product manager for Navigate360 and is responsible for the company’s social media and e-mail scanning technologies for schools. Kendall helps develop product strategy, growth plans and adoption of new technologies with the data science team.
