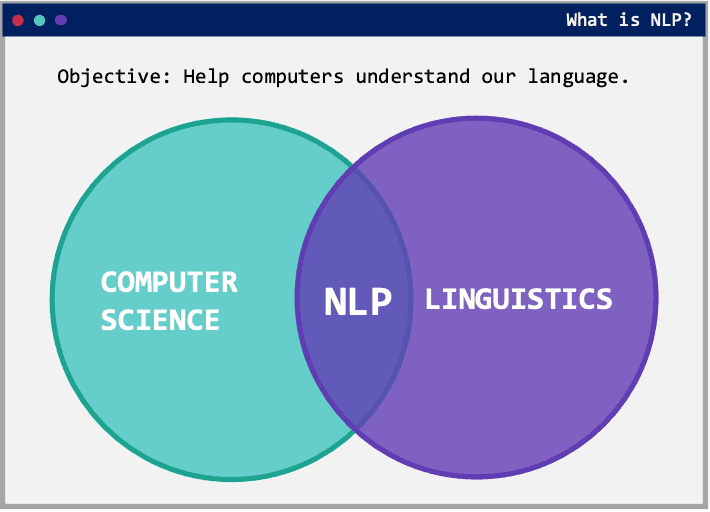

What lies at the intersection of the fields of linguistics and artificial intelligence, and how can machines make sense of human language to help leaders keep their organization safe?

In today’s world, it is more important than ever for organizations to maintain a healthy grasp on sentiment to stay ahead of threats and harmful intentions. It is nearly impossible to stay apprised of digital conversations across a multitude of channels, so we must rely on technology to scan that sea of communication.

Human language is complex, however: it lacks explicit rules, evolves constantly and is full of ambiguity. This makes it extremely difficult to teach machines to understand context without human supervision. That is why Navigate360’s Detect pairs state-of-the-art technology with expert linguists and data analysts to cut through the complexity.

In this article, we will explore what lies at the intersection of the fields of linguistics and artificial intelligence (AI) and explain how machines can make sense of human language, ensuring we help leaders stay ahead of threats in the vast circulation of digital communications.

Let’s Define Natural Language Processing

Natural language processing, usually shortened to NLP, is a broad branch of artificial intelligence that focuses on the interaction between computers and human languages. Through various techniques, NLP aims to read, decipher and make sense of language.

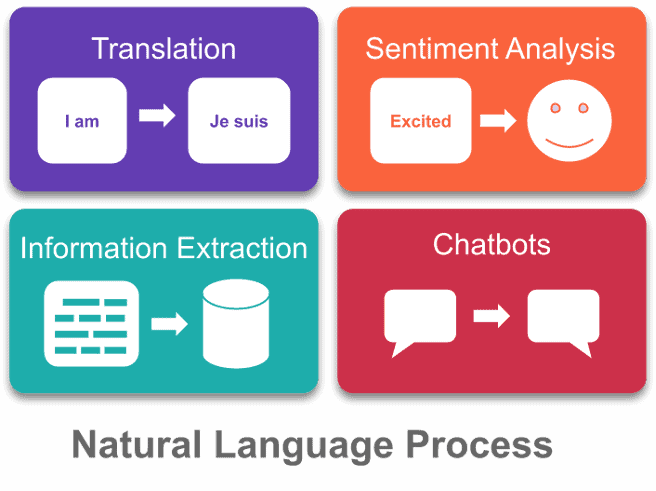

Since language is embedded in almost every aspect of our lives, natural language processing is one of the most active fields of research in AI. Some of the most common applications of natural language processing include:

- Virtual assistants and chatbots (Siri, Alexa, Google Assistant): These systems use speech recognition to convert the sound of our voice into text that is then analyzed and interpreted using natural language processing techniques.

- Translations: Google Translate is one of the best examples of the use of natural language processing. It is impractical to collect all of the rules from the 108 languages that Google Translate covers. Instead, natural language processing techniques are used to process the source content and then convert it into the target language.

- Text summarization: The system first ingests a large volume of data (an entire book, for instance), extracts meaningful information, and then generates a summary of the ingested data. We use a version of this to extract trending topics and group similar online content.

- Spam detection: The detection of spam in your mailbox is one of a handful of natural language processing problems that researchers consider solved.

Can’t Machines Just Learn to Read Like We Did?

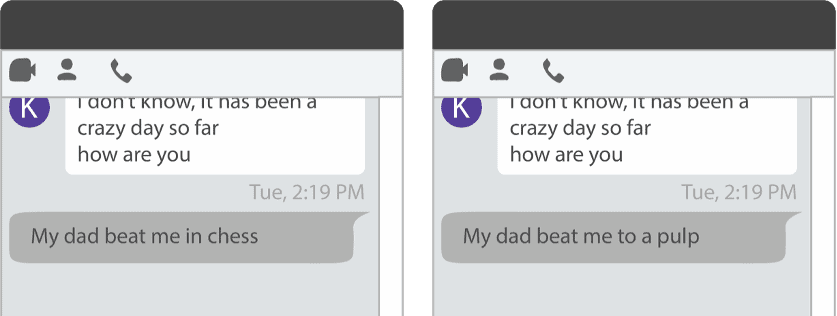

The fundamental nature of human language makes it difficult to master. To fully grasp the meaning of a word, one needs to know all the definitions of that word as well as how these meanings are affected by surrounding words. The human brain does this easily and in a split second. Technology needs parameters to make those distinctions.

Here is an example in which most of the content of the text is identical between the two versions. Only the last few words depict the full picture:

In addition, human language is not fully defined by a set of explicit rules. Most would say that people use language as an art, not as a science. Our language is in constant evolution; new words are created while others are recycled. Finally, abstract notions such as sarcasm are hard to grasp, even for native speakers. This is why it is important to constantly update our language engine with new content and to continuously train our AI models to decipher intent and meaning quickly and efficiently.

For all these reasons, our language represents the exact opposite of what mathematical models are good at. These models need clear, unambiguous rules to perform the same tasks over and over. Just as students learn within consistent boundaries and an evolving, blended curriculum, so too does the machine learn with human supervision.

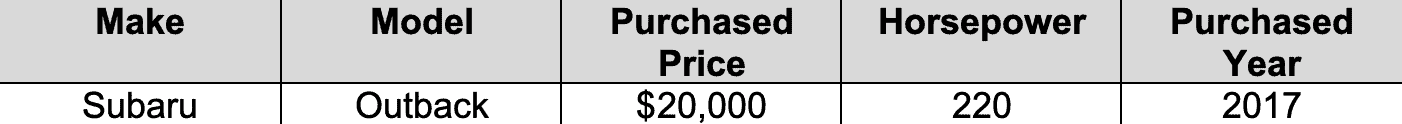

For instance, let’s imagine that you would like to estimate the price of a car and you have access to its technical data. Your database would look like this:

Then you would use each feature to increase or decrease the price of the car based on a benchmark value. This is a relatively simple problem to solve since the details can be summarized using trustworthy, numeric data.
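The benchmark-and-adjust idea can be sketched in a few lines of Python. This is only an illustration: the benchmark price, the feature names and the per-unit adjustments are all hypothetical values chosen for this example, not real pricing data.

```python
# Hypothetical benchmark price and per-unit adjustments (illustrative only).
BENCHMARK_PRICE = 20_000.0

ADJUSTMENT_PER_UNIT = {
    "age_years": -1_000.0,        # each year of age lowers the price
    "mileage_10k_km": -500.0,     # each 10,000 km driven lowers the price
    "horsepower_over_100": 50.0,  # each horsepower above 100 raises it
}


def estimate_price(features):
    """Start from the benchmark and apply one adjustment per feature."""
    price = BENCHMARK_PRICE
    for name, value in features.items():
        price += ADJUSTMENT_PER_UNIT[name] * value
    return price


# A hypothetical car: 3 years old, 40,000 km driven, 120 horsepower.
car = {"age_years": 3, "mileage_10k_km": 4, "horsepower_over_100": 20}
estimated = estimate_price(car)
print(estimated)
```

Every input here is already a number, so each feature can move the price up or down directly.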

Now, let’s imagine you’re answering a question that involves analyzing language instead of numeric data. How would you quantify the happiness of the following sentence?

“The weather was so nice this Saturday but because of COVID, we were not able to go skiing.”

One could start by isolating words based on their happiness score (which is not explicitly provided). In this case, the words “nice,” “Saturday” and “skiing” are probably associated with positive sentiment, while “COVID” would be associated with negative sentiment. But this approach does not account for the negation introduced by “not able to go” preceding “skiing.” Things are a lot trickier when dealing with text data.
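To make the limitation concrete, here is a minimal Python sketch of that word-by-word approach. The scores in `WORD_SCORES` are hypothetical values invented for this example; they do not come from any real sentiment lexicon.

```python
import re

# Hypothetical per-word happiness scores (illustrative only).
WORD_SCORES = {"nice": 1, "saturday": 1, "skiing": 1, "covid": -1}


def naive_sentiment(text):
    """Sum the scores of individual words, ignoring context and negation."""
    words = re.findall(r"[a-z]+", text.lower())
    return sum(WORD_SCORES.get(word, 0) for word in words)


sentence = (
    "The weather was so nice this Saturday but because of COVID, "
    "we were not able to go skiing."
)
score = naive_sentiment(sentence)
print(score)
```

With these scores the sentence comes out positive overall, even though it describes a disappointment. The word “skiing” is counted as happy because the scorer never sees the “not able to go” in front of it.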

How Does Natural Language Processing Work?

The essential step of natural language processing is to convert text into a form that computers can understand. In order to facilitate that process, NLP relies on a handful of transformations that reduce the complexity of the language.

Stop-Word Removal

Stop words are high-frequency words that add little or no semantic value to a sentence. Common English stop words include “the,” “which,” “for,” “at” and “to.”

For instance, consider this sentence:

“It seems that all the students are looking forward to going back to school.”

…is converted to:

“It seems students are looking forward going back school.”
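As a rough sketch, stop-word removal can be written as a simple filter in Python. The `STOP_WORDS` set below is a small hypothetical list chosen for this example; production systems typically rely on much longer, curated lists.

```python
import string

# A small, hypothetical stop-word list used only for this example.
STOP_WORDS = {"that", "all", "the", "to", "which", "for", "at"}


def remove_stop_words(sentence):
    """Drop every token whose normalized form appears in STOP_WORDS."""
    kept = []
    for token in sentence.split():
        # Compare a lowercase, punctuation-free form against the list.
        normalized = token.strip(string.punctuation).lower()
        if normalized not in STOP_WORDS:
            kept.append(token)
    return " ".join(kept)


original = (
    "It seems that all the students are looking forward "
    "to going back to school."
)
filtered = remove_stop_words(original)
print(filtered)
```

Because the filter is applied token by token, every occurrence of a listed word is removed, including both instances of “to.”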

Lemmatization

Human language relies on inflected forms of words, that is, words in their different grammatical forms. NLP uses lemmatization to reduce these forms to a common base without losing too much meaning, which simplifies the language the computer has to understand.

The lemma is the root form of a word. For example, the words “am, are, is, were, was, been” share the same lemma “be.”

The sentence:

“All the students are looking forward to going back to school.”

is lemmatized as:

“All the student be look forward to go back to school.”
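The following Python sketch imitates lemmatization with a hand-written lookup table. The `LEMMAS` dictionary is a toy assumption that covers only the words in this example; real lemmatizers rely on full dictionaries and part-of-speech information.

```python
import string

# Toy lemma lookup (hypothetical, covers only the words in this example).
LEMMAS = {
    "students": "student",
    "looking": "look",
    "going": "go",
    "am": "be", "are": "be", "is": "be",
    "were": "be", "was": "be", "been": "be",
}


def lemmatize(sentence):
    """Replace each known word with its lemma, keeping trailing punctuation."""
    result = []
    for token in sentence.split():
        core = token.rstrip(string.punctuation)
        trailing = token[len(core):]
        # Unknown words are left unchanged.
        lemma = LEMMAS.get(core.lower(), core)
        result.append(lemma + trailing)
    return " ".join(result)


lemmatized = lemmatize(
    "All the students are looking forward to going back to school."
)
print(lemmatized)
```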

Bag of Words

Bag of words is a commonly used model that counts the words in a piece of text. It records how often each word occurs, disregarding grammar and word order. These word frequencies are then used as features for training a classifier, just as the technical data was in our car pricing example. This simple approach can lead to satisfactory results in some cases, but it has drawbacks: it does not preserve word order, and the encoded numbers do not convey the meaning of the words.

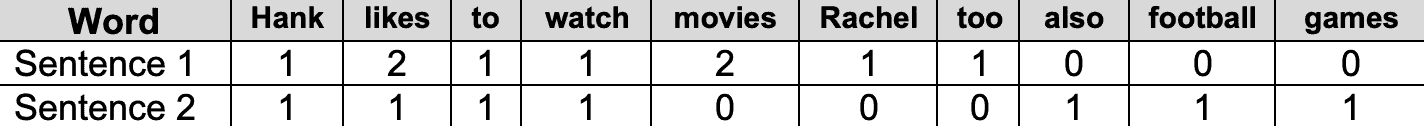

For example:

Sentence 1: “Hank likes to watch movies. Rachel likes movies too.”

Sentence 2: “Hank also likes to watch football games.”

…would be converted into:
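One way to compute such a conversion is sketched below in Python, using only the standard library. The lowercase tokenization and the alphabetical ordering of the vocabulary are assumptions made for this example; other implementations may tokenize or order words differently.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep only alphabetic words."""
    return re.findall(r"[a-z]+", text.lower())


sentence_1 = "Hank likes to watch movies. Rachel likes movies too."
sentence_2 = "Hank also likes to watch football games."

counts_1 = Counter(tokenize(sentence_1))
counts_2 = Counter(tokenize(sentence_2))

# Shared vocabulary across both sentences, in alphabetical order.
vocabulary = sorted(set(counts_1) | set(counts_2))

# One count per vocabulary word; a Counter returns 0 for missing words.
vector_1 = [counts_1[word] for word in vocabulary]
vector_2 = [counts_2[word] for word in vocabulary]

print(vocabulary)
print(vector_1)
print(vector_2)
```

Each sentence becomes a list of ten counts, one per vocabulary word. Word order is lost: the vectors record that “likes” and “movies” each appear twice in the first sentence, but not where.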

Embeddings

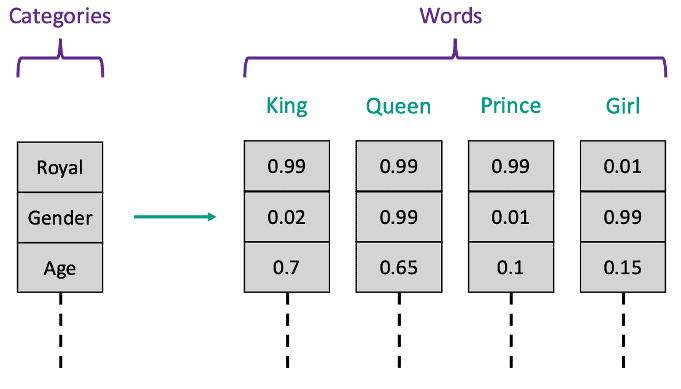

One of the most advanced techniques in natural language processing consists of encoding each word as a series of numbers instead of just one. This allows the encoding of the word to carry meaningful information. For example, if we were interested in evaluating words based on three criteria (“Royal,” “Gender” and “Age”), our embeddings could look like the following:

Storing the information using a longer sequence of numbers allows you to convey more meaning. By looking at the embedding values, we can see that the words “King” and “Queen” are very similar when it comes to the “Royal” and “Age” criteria, but they are on opposite ends of the “Gender” criterion.
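The comparison can be sketched in Python as follows. The embedding values are hypothetical numbers invented for this example; real embeddings are learned from data and usually have hundreds of dimensions with no human-readable labels.

```python
# Hypothetical three-number embeddings (illustrative values only).
CRITERIA = ("royal", "gender", "age")
EMBEDDINGS = {
    "king": (0.99, 1.0, 0.75),
    "queen": (0.99, -1.0, 0.625),
}


def per_criterion_gap(word_a, word_b):
    """Absolute difference between two words on each criterion."""
    a, b = EMBEDDINGS[word_a], EMBEDDINGS[word_b]
    return {name: abs(x - y) for name, x, y in zip(CRITERIA, a, b)}


gaps = per_criterion_gap("king", "queen")
for name, gap in gaps.items():
    print(name, gap)
```

With these values, the gap is zero on “royal” and small on “age,” while “gender” shows the largest possible gap on a scale that runs from -1 to 1.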

How Natural Language Processing Is Used at Navigate360

NLP techniques are fully integrated into several of the services we provide. Our most notable achievements are the following:

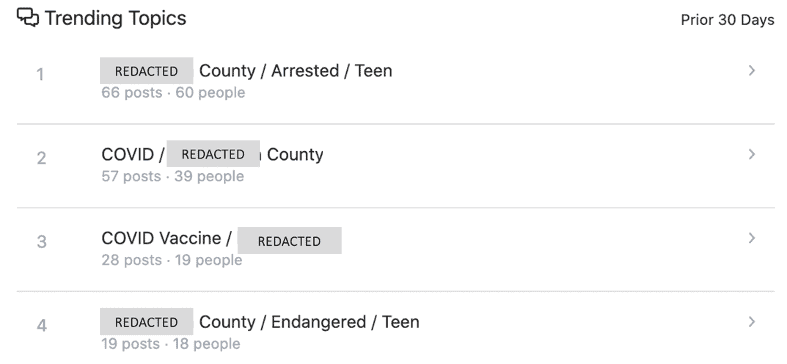

- Trending Topics: We use natural language processing to aggregate online conversations that we identify as being related. This gives our clients a summary of the trending topics being discussed, providing leaders with a sense of what resonates within their organization and what might help with safety and security initiatives.

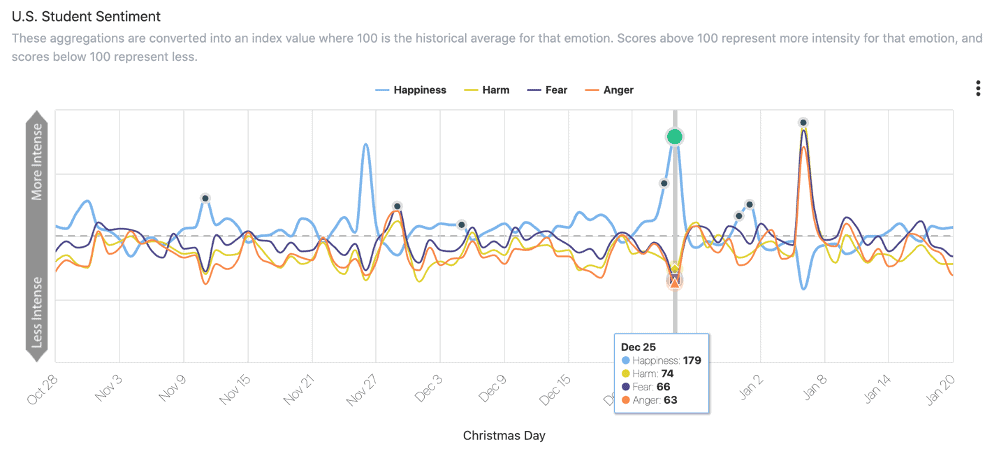

- Sentiment Analysis: Our platform analyzes the content of public social media posts to quantify the overall mood of the U.S. student population. Our system can quantify a wide range of emotions, such as happiness, harm, fear and anger. This direct application of NLP is called sentiment analysis and provides our clients with insights into the impacts of global events on American students, allowing leaders to get ahead of student needs.

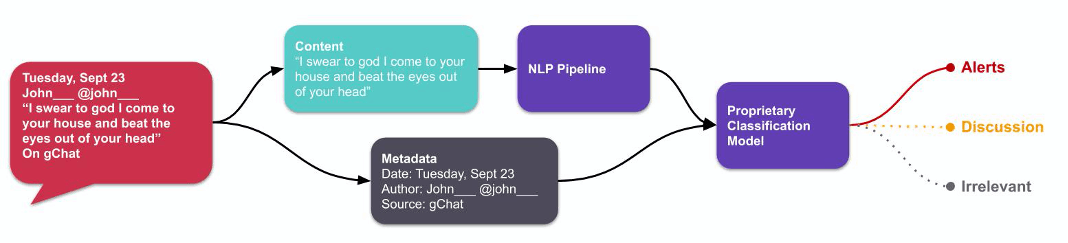

- Content Classification: Finally, our most complex system uses state-of-the-art natural language processing models to instantaneously identify threats among the content we ingest. This model combines the complex architectures used in deep learning with simpler NLP techniques, such as sentiment analysis, tokenization and morphological segmentation.

The Power of Natural Language Processing

Detect’s language engine would not be as powerful as it is without the aid of NLP. As the field of natural language processing expands, we continue to make sure we are using the best new methodologies and tools available. Navigate360’s Detect has an unmatched ability to identify important signals in a huge amount of data and deliver these insights so they can be digested easily and acted upon if needed. We work to ensure our technology delivers what you need when you need it to help prevent the preventable.

Additional Resources to Help You Prevent the Preventable

Take advantage of these additional free resources to support your threat detection & prevention efforts: